# Compress multiple directories or files at once

For example, let's say you want to compress the /home/ubuntu/Downloads directory, the /usr/local/stuff directory, and the /home/ubuntu/Documents/notes.txt file. You'd just run the following command, where `archive.tar.gz` is the name of the archive to create: `tar -czvf archive.tar.gz /home/ubuntu/Downloads /usr/local/stuff /home/ubuntu/Documents/notes.txt`. Just list as many directories or files as you want to back up.

In some cases, you may wish to compress an entire directory but leave out certain files and directories. You can do so by adding an `--exclude` switch for each directory or file you want to exclude.
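A minimal sketch of the multi-source command with an exclusion, run inside a throwaway temp directory. The `Downloads`, `stuff`, and `Documents/notes.txt` paths are hypothetical stand-ins for the real paths above, and `Downloads/cache` is an invented subdirectory to leave out. It assumes GNU tar, where `--exclude` is best placed before the source paths: recent GNU tar releases treat it as positional and warn when it comes after them.

```shell
set -eu

# Throwaway working directory so the example never touches real files.
work="$(mktemp -d)"
cd "$work"

# Hypothetical sample input standing in for the directories and file above.
mkdir -p Downloads/cache stuff Documents
echo "keep me"   > Downloads/report.txt
echo "skip me"   > Downloads/cache/tmp.bin
echo "more data" > stuff/data.txt
echo "my notes"  > Documents/notes.txt

# The archive name follows -f; every remaining argument is a source.
# --exclude comes before the sources so it applies to all of them.
tar -czvf archive.tar.gz --exclude='Downloads/cache' Downloads stuff Documents/notes.txt

# List the archive's members (-t) to confirm what was included.
tar -tzf archive.tar.gz | sort > contents.txt
cat contents.txt
```

The listing should show `Downloads/report.txt`, `stuff/data.txt`, and `Documents/notes.txt`, with nothing from `Downloads/cache`.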

# How to untar a tar.gz file

Or, let's say there's a directory at /usr/local/something on the current system and you want to compress it to an archive file (here, `archive.tar.gz`). You'd type the following command and press Enter: `tar -czvf archive.tar.gz /usr/local/something`. Alternatively, you can also specify a source and destination file path when using the tar utility.
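A sketch of both halves, assuming GNU tar: compressing a directory given by an absolute path, then extracting with an explicit source archive path and destination folder (`-C`). The temp-directory paths `something` and `extracted` are hypothetical stand-ins for /usr/local/something and your chosen destination.

```shell
set -eu

work="$(mktemp -d)"
src="$work/something"    # hypothetical stand-in for /usr/local/something
out="$work/extracted"    # hypothetical destination folder for extraction
mkdir -p "$src" "$out"
echo "hello" > "$src/file.txt"

# Compress using an absolute source path. GNU tar strips the leading "/"
# from member names and mentions this on stderr.
tar -czvf "$work/archive.tar.gz" "$src"
tar -tzf "$work/archive.tar.gz" > "$work/members.txt"

# Extract with an explicit source archive path and destination folder (-C).
tar -xzvf "$work/archive.tar.gz" -C "$out"
```

Because the stored names are relative, extraction recreates the full directory path under the destination folder rather than writing back to the original absolute location.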

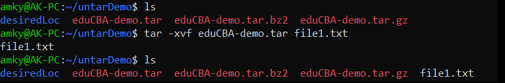

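Let's say you have a directory named `stuff` in the current directory and you want to save it to an archive file (here, `archive.tar.gz`). You'd run the following command: `tar -czvf archive.tar.gz stuff`.

With the command prompt open, use `cd` to change the current working directory to the location of the .tar.gz file.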
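A round-trip sketch for the `stuff` example: compress the directory, move the archive elsewhere, `cd` to where the .tar.gz file is located, extract it there, and compare the copies. The sample files and the `elsewhere` folder are hypothetical. The same cd-then-extract pattern should apply at the Windows command prompt with its bundled `tar.exe`, but this sketch is a POSIX shell script.

```shell
set -eu

work="$(mktemp -d)"
cd "$work"

# Hypothetical contents for the "stuff" directory.
mkdir -p stuff/sub
echo "a" > stuff/a.txt
echo "b" > stuff/sub/b.txt

# Compress "stuff" from the current directory into archive.tar.gz.
tar -czvf archive.tar.gz stuff

# Move the archive somewhere else, cd to where the .tar.gz file is located,
# and extract it there.
mkdir elsewhere
mv archive.tar.gz elsewhere/
cd elsewhere
tar -xzvf archive.tar.gz

# diff -r exits 0 and prints nothing when both trees are identical.
diff -r "$work/stuff" "$work/elsewhere/stuff"
```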

# Untar a tar.gz file: update

To extract a .tar.gz file on Linux, open a terminal window (Ctrl+Alt+T). From the terminal, change to the directory where your .tar.gz file is located with `cd /directorypath`, then extract its contents with `tar -zxvf` followed by the file name.

Hi, and welcome to the Microsoft Q&A forum. Thanks for your query. Since this query covers two areas of Azure (ADF and Azure Functions), I'd like to provide my input on the ADF part.

If your data format is something other than AvroFormat, OrcFormat, or ParquetFormat, you can try the compression settings in your source dataset connection settings (`GZIP` or `TarGZIP`) to decompress files.

Unfortunately, there is no out-of-box functionality in ADF to extract the contents of a TAR file. Here is an existing user voice feature request thread; I would encourage you to up-vote and/or comment on the suggestion to increase the priority of its implementation.

As a workaround, though, you could try using the extensibility features of Azure Data Factory to transform files that aren't supported. Two options are Azure Functions and custom tasks using Azure Batch (Custom Activity in ADF). You can see a sample that uses an Azure function to extract the contents of a tar file: Untar Azure File With Azure Function Sample.

Going back to the second part of the ask, i.e., the error from Azure Functions apps, let me reach out to the Integration folks so they can better assist. I'll get back to you once I have an update from the internal team regarding the error from the function apps.
T16:06:42.721 ERROR: Program '7za.exe' failed to run: StandardOutputEncoding is only supported when standard output is redirected. At `D:\home\site\e $InputBlob` `+ ~~~~~~~~~~~~~~~~~~~~~~`

- Exception: Program '7za.exe' failed to run: StandardOutputEncoding is only supported when standard output is redirected. At `D:\home\site\e $InputBlob` `+ ~~~~~~~~~~~~~~~~~~~~~~`
- HResult: -2146233087
- CategoryInfo: ResourceUnavailable: (:), ParentContainsErrorRecordException
- FullyQualifiedErrorId: NativeCommandFailed
- InvocationInfo:
  - ScriptLineNumber: 9
  - OffsetInLine: 1
  - HistoryId: -1
  - ScriptName: `D:\home\site\e $InputBlob`
  - PositionMessage: At `D:\home\site\e $InputBlob` `+ ~~~~~~~~~~~~~~~~~~~~~~`
- PSScriptRoot: `D:\home\site\wwwroot\tools`
- PSCommandPath:

In run.ps1, the following works when the tar file is stored locally:

- `Set-Location D:\home\site\wwwroot\tools`
- `.\7za.exe x 1.tar.gz`

When the file is a blob in the Blob container (`$InputBlob`), the same extraction doesn't work; the error I receive is shown above.
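Separately from the 7-Zip error, here is a minimal sketch of reading a .tar.gz from a byte stream instead of a named file: with tar, `-f -` means "read the archive from standard input". This is only an illustration in a POSIX shell, not an Azure Functions fix. `blob.bin` is a hypothetical local file standing in for the blob's contents, and `cat` stands in for whatever produces those bytes.

```shell
set -eu

work="$(mktemp -d)"
cd "$work"

# Build a sample archive to play the role of the blob's contents.
mkdir payload
echo "row1,row2" > payload/data.csv
tar -czf blob.bin payload   # hypothetical stand-in for the downloaded blob
rm -r payload

# "-f -" reads the archive from standard input, so the bytes can be piped in.
mkdir extracted
cat blob.bin | tar -xzf - -C extracted

ls -R extracted
```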

The 7-Zip command in run.ps1 works for a test .tar.gz file uploaded to the Functions App, but it throws an error for files stored in the Blob container.

One approach is to run `gunzip` on the archive first, followed by a separate `tar` extraction of the resulting .tar file.
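A sketch of that two-step approach: `gunzip` first, then a plain `tar -xvf` on the resulting .tar file, with no `-z` needed. The archive name `data.tar.gz` and its contents are hypothetical.

```shell
set -eu

work="$(mktemp -d)"
cd "$work"

# Hypothetical sample archive named data.tar.gz.
mkdir data
echo "hello" > data/greeting.txt
tar -czf data.tar.gz data
rm -r data

# Step 1: decompress. By default gunzip replaces data.tar.gz with data.tar.
gunzip data.tar.gz

# Step 2: extract the now-uncompressed tar archive.
tar -xvf data.tar
```

If you want to keep the compressed copy, recent versions of GNU gzip accept `gunzip -k`.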

# Untar a tar.gz file: archive

How can I extract a .tar.gz file using Azure Data Factory or a Functions App so it can be ingested by an ETL process in ADF? I tried using 7-Zip in a Functions App, which worked fine to extract a test .tar.gz file.

Depending on the OS, I've found it usually works better to 'unzip' the file before 'untarring' it, instead of trying to do it all in one command.

Basic syntax: `tar -zxvf <archive-file>`, where:

- z tells tar to decompress the archive with gzip
- x tells tar to extract the files
- v tells the command to list all of the files in the archive
- f tells tar that the next argument is the archive file name
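The terminal steps and the basic syntax, sketched as a script. `example.tar.gz`, its `docs` contents, and the `longform` directory are hypothetical, and the temp directory plays the role of /directorypath. The second extraction spells each flag out with GNU tar long options, assuming GNU tar is installed.

```shell
set -eu

# Hypothetical setup: a directory (standing in for /directorypath) holding example.tar.gz.
dir="$(mktemp -d)"
mkdir -p "$dir/src/docs"
echo "readme" > "$dir/src/docs/README.txt"
tar -czf "$dir/example.tar.gz" -C "$dir/src" docs
rm -r "$dir/src"

# Change to the directory where the .tar.gz file is located.
cd "$dir"

# Short form: -z gzip, -x extract, -v verbose listing, -f archive file name.
tar -zxvf example.tar.gz

# Same operation with GNU tar long options, run from a fresh subdirectory.
mkdir longform
cd longform
tar --gzip --extract --verbose --file=../example.tar.gz
```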
